New dataset on personality computing released:

AI-driven interfaces

RAI leads the machine intelligence work package in MaTHiSiS. In this role, RAI conducts research on intelligent learning tools that build on automatic human emotion recognition and AI-based personalization of the learning procedure:

Activity recognition

As part of the ICT4Life project, RAI is conducting research in Ambient Assisted Living using computer vision. The latest work addresses anomaly detection in indoor activities, with the broader goal of providing caregivers with timely messages and warnings:

TurtleBot learning application in MaTHiSiS project

UM has developed several applications to be used in the context of the MaTHiSiS platform. These applications will be used in learning environments by children with and without special needs (namely, Autism Spectrum Disorder and Profound and Multiple Learning Disabilities).

You can see the robot application during one of the tests performed in the DKE facilities:

Abnormal behavior Detector in ICT4Life Platform

We present a real-time algorithm to detect common Alzheimer’s symptoms such as confused and repetitive behaviors. Our model takes Kinect video data and ambient sensor data as input and produces a behavior classification as output.

In this demo we discriminate between normal daily activities and common behavioral symptoms of Alzheimer’s disease.

More information at: www.ict4life.eu

The Head Pose – Eye Gaze dataset (HPEG) has been built in order to assist in research related to human gaze recognition, with monocular, uncalibrated systems, under the constraint of free head and eye gaze rotations.

You can download HPEG (Head Pose & Eye Gaze dataset) from here. The dataset consists of two sessions: one with head pose only, in which people move their heads freely, and one combining both cues (head pose and eye gaze). It was recorded in indoor conditions, with a complex background and intense human action taking place in some of the sequences. For more details, please read the paper cited below.

The dataset can be freely downloaded and used for research purposes, provided that any work done with the dataset cites the following paper:

Asteriadis, Stylianos, Dimitris Soufleros, Kostas Karpouzis, and Stefanos Kollias. “A natural head pose and eye gaze dataset.” In Proceedings of the International Workshop on Affective-Aware Virtual Agents and Social Robots, p. 1. ACM, 2009.

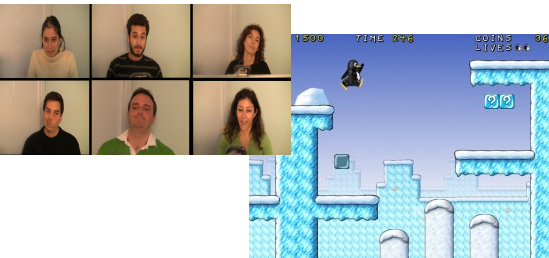

We present the Platformer Experience Dataset (PED), the first open-access game experience corpus, which contains multiple modalities of user data from Super Mario Bros players. The database is intended for capturing player experience through context-based (i.e., game content), behavioral, and visual recordings of platform game players. In addition, the database contains demographic data on the players and self-reported annotations of experience in two forms: ratings and ranks. PED opens the way to desktop and console games that use video from web cameras and visual sensors, and it offers possibilities for holistic player experience modeling approaches that can, in turn, yield richer game personalization.

The dataset is made publicly available here and we encourage researchers to use it for testing their own player experience detectors.

The dataset is presented in the following paper (please cite our work if you use the dataset for your research):

- K. Karpouzis, G. Yannakakis, N. Shaker, S. Asteriadis. The Platformer Experience Dataset, Sixth Affective Computing and Intelligent Interaction (ACII) Conference, Xi’an, China, 21-24 September, 2015.